SAP Hybris (Spring) and WCS (J2EE) eCommerce Blog

SAP Hybris and IBM WCS experiences shared through the eyes of highly proficient, efficient and sufficient eCommerce veterans.

Saturday, April 30, 2016

Thursday, April 7, 2016

Design Considerations and Origins of the Logo

My understanding of what makes a logo work dictates how I approach all design, whether it is highly technical or creative. I'd like to share the constraints imposed on logo design, because those same constraints carry over to every design strategy I explore.


Elements of Logo Design

There are basic elements to consider when exploring logo design. Although the design world calls them elements, to me they are constraints. Constraints shape how I weigh all of the factors involved in outlining a design, narrowing my options down to what will actually work. The challenge in knowing whether a design will work is that we cannot predict the future, and historical evidence is always read from a particular perspective. A novel idea comes with no guarantee that it will work. The most straightforward test is simply whether or not the logo is effective: look at it, and if it doesn't work at first glance, poring over it for hours isn't going to help. That is why I emphasize knowing the elements that make up a logo. With them in mind, it is easier to judge whether a new idea will also be effective.

Let's start by outlining the basics of logo design elements:

Proportions

The main consideration for proportion in a composition is achieving the Golden Ratio. You see the ratio everywhere: in the patterns of nature, in the roots of architecture and art, and in almost every effective logo you know. Most importantly, the composition of the human face, which people learn as one of their first recognition patterns in infancy, is one of the most widely used "logos" in marketing campaigns that aim to drive decision making.

It is worthwhile to at least review a logo's proportions. When deciding on a design strategy, the proportions of the logo set the tone for the lasting user experience of the site, much as facial recognition shapes your lifelong experience of the people around you. This may seem like an exaggeration. However, when proportion is deliberately emphasized, the site experience benefits by reinforcing the tenets of the organization.

I'm not suggesting you get too specific about the mathematical constraints of the Golden Ratio. Precise measurements can often be implied through prominence and the impression of size. More creative approaches can still accommodate the need to be mathematically correct, but beware of drifting so far into the creative side that you lose the simplicity of the design. Keeping proportion simple leaves attention for the other elements of logo design: contrast, repetition and nuance.
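To make the arithmetic concrete, here is a minimal Python sketch. It splits a length, such as a hypothetical 300 px logo width, into two segments whose ratio is the Golden Ratio, φ = (1 + √5) / 2 ≈ 1.618. It also builds a descending size scale in which each step is φ times smaller than the last. The function names and the pixel values are illustrative assumptions, not part of any design tool.

```python
import math
from typing import List, Tuple

# Golden Ratio: phi = (1 + sqrt(5)) / 2, approximately 1.618
PHI = (1 + math.sqrt(5)) / 2


def golden_split(length: float) -> Tuple[float, float]:
    """Split a length into (major, minor) so that major / minor == PHI
    and major + minor == length."""
    major = length / PHI
    minor = length - major
    return major, minor


def golden_scale(base: float, steps: int) -> List[float]:
    """Return `steps` sizes, each PHI times smaller than the previous one
    (e.g. candidate sizes for a mark, a wordmark and a tagline)."""
    return [base / PHI ** i for i in range(steps)]


if __name__ == "__main__":
    major, minor = golden_split(300)  # hypothetical 300 px logo width
    print(f"mark: {major:.1f}px, text: {minor:.1f}px")
    print([round(size, 1) for size in golden_scale(48, 3)])
```

The split works because φ − 1 = 1/φ, so `length / PHI` and the remainder always stand in the ratio φ. In practice these numbers are a starting point for the eye, not a rule to measure against.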

Contrast

When you try to include everything in a composition, complexity multiplies. The simpler the message, the more it relies on common traits that bridge the subtle details together. Contrast gains its value in balancing complexity against simplicity.

Logos usually include shapes, colo(u)rs and fonts. Two completely different font families rarely go well together: although they contrast by definition, there is no relationship between them. Instead, keep the font families closely related, so that the difference lies only in subtle changes of kerning, alignment or emphasis. With shapes, positioning is key, mostly for the sake of balance. Two similar images of different sizes also contrast by definition; however, if they share the same axis, their weights appear to compete. Changing the geometry of the shapes lets them share an axis to some extent, but it is much better to overlap or integrate the shapes into a single entity, where the contrast within that relationship is compelling. As for colo(u)rs, pair two complementary colo(u)rs without adding many more, unless the additions are shades of the same colo(u)r.

It is quite challenging to work contrast into a composition such as a logo. You could mark anything as different anywhere and simply declare it contrast; the main idea is to be consistent. Consistency in how elements contrast establishes the bridge that reinforces the message. Consistency can also be emphasized through repetition, which is the next element of a logo.

Repetition

One of the most visually pleasing experiences for humans is the beauty perceived in abstract form. To create that experience, establish an axis, or point of reference, and then arrange similar forms around it. The subtlest way to achieve symmetry is through repetition, which can imply sequence, suggest animation, and communicate more information than the individual objects could contain on their own.

The most popular number of repetitions is three, because it keeps symmetry from feeling forced; when symmetry is too obvious, the composition becomes tiring. Multiples of three are also effective, as long as there is a centre point.

Take the IBM logo, for example. At first glance its horizontal stripes seem to repeat too many times. That complexity is balanced by the repetition of the letters, which hit the target number of three. Because all three letters share the same repeated lines, the logo conveys a message of consistent coverage, communicated entirely through repetition. With two or four letters, the contrast against the number of lines might not be as effective.

Nuances

The most important element of design is attention to detail, to the degree that repetition, contrast and proportion do not appear forced. At the same time, when everything is too structured or too organized, the design draws no attention. Nuance is the middle ground between structure and creativity that holds the design together.

If you are a fan of font styles, then you are really a fan of nuance. Most fonts have small nuances that distinguish a letter from the same letter in another font, and those small differences in how a letter is drawn are what make a font appealing.

Nuance is the hardest element to measure directly. It is the fine coordination between proportion, contrast and repetition that needs to be reviewed, and that analysis tells you whether the combination of elements comes across as subtle or forced. If the design seems forced in some way, readjusting one element may require readjusting the entire composition. This alone can demand hours of detailed effort to achieve nuance.

Conclusion

I posted about logo considerations because they dictate how I approach design for any solution. The discipline of perceptive design applies to all facets of life, which I attribute to the fact that everything we do is based on perspective and perceptual alignment. Being able to arrange a logo, and to analyse its effectiveness, contributes to elegant design for any solution, whether visual, technical or mechanical. Behind every design is a set of constraints, some known by all and many more known only by experts. Following these key elements makes those hidden constraints more transparent.

Monday, March 28, 2016

eCommerce Performance Assessment Process

Optimizing the performance of an eCommerce site combines the development governance process with a systematic approach to capturing system statistics and analysing them.

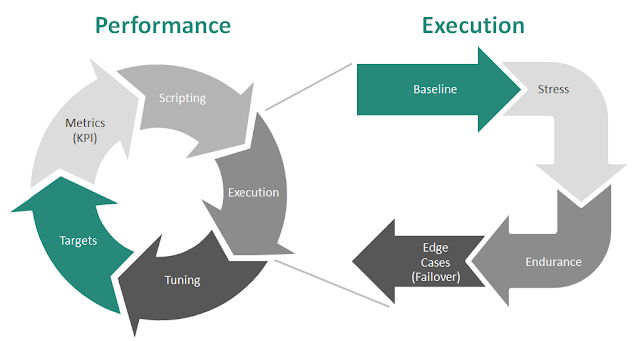


Performance becomes a greater concern as an eCommerce solution opens up to unstructured public traffic. Optimized performance also reduces the volume of support calls.

If development were built directly in a production-ready environment, key performance indicators could be verified alongside regular unit testing. That is not the case in regular development cycles, which is why a performance optimization plan is needed: one that optimizes the solution beyond what development cycles can cover. A further advantage of building a performance cycle into the delivery of an eCommerce solution or enhancement is that the team gains first-hand knowledge of how the system operates. This intellectual capital also feeds into future development enhancements.

Although performance optimization may not tie directly into the earlier stages of development, some mechanisms should still be considered early in a project:

Development preparation

The development phase should include governance that enforces parametric (parameterized) queries over concatenated queries; this is basic knowledge in the development community. Beyond newly created queries, all new and existing data access in the application should use the WebSphere Commerce data cache with an optimized configuration. Once the data cache is enabled, additional unit testing should be run during the development cycle to ensure that integrated applications still retrieve the intended data.

A planned application-layer caching strategy should include both TTL (time-to-live) and invalidation mechanisms that can be automated and managed. The Content Delivery Network (CDN) can also cache static content, which could keep up to 20% of all online activity from having to call the origin application landscape. Specifically for IBM WebSphere Commerce, the out-of-the-box dynacache capabilities can easily be incorporated into a solution; sample cache configuration files are provided in the base samples directory.
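The combination of TTL and invalidation can be sketched in a few lines of Python. This is a generic in-memory illustration, not dynacache and not a CDN. Entries expire after a fixed TTL, and each entry can also be tied to dependency ids (here a hypothetical product SKU), so that an update event invalidates every entry that depends on it. The clock is injectable so the behaviour can be tested deterministically.

```python
import time
from typing import Any, Callable, Dict, Optional, Set, Tuple


class TtlCache:
    """In-memory cache with time-to-live expiry plus dependency-based
    invalidation. A sketch only; not a replacement for dynacache or a CDN."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._entries: Dict[str, Tuple[Any, float]] = {}  # key -> (value, expires_at)
        self._deps: Dict[str, Set[str]] = {}              # dependency id -> keys

    def put(self, key: str, value: Any, depends_on: Tuple[str, ...] = ()) -> None:
        self._entries[key] = (value, self.clock() + self.ttl)
        for dep in depends_on:
            self._deps.setdefault(dep, set()).add(key)

    def get(self, key: str) -> Optional[Any]:
        entry = self._entries.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self.clock() >= expires_at:  # TTL elapsed: drop it and report a miss
            del self._entries[key]
            return None
        return value

    def invalidate(self, dependency_id: str) -> int:
        """Drop every entry tied to a dependency (e.g. after a price update).
        Returns the number of entries removed."""
        removed = 0
        for key in self._deps.pop(dependency_id, set()):
            if self._entries.pop(key, None) is not None:
                removed += 1
        return removed


if __name__ == "__main__":
    now = [0.0]  # fake clock so the example is deterministic
    cache = TtlCache(ttl_seconds=300, clock=lambda: now[0])
    cache.put("/product/SKU-1", "<html>...</html>", depends_on=("SKU-1",))
    print(cache.get("/product/SKU-1") is not None)  # fresh hit
    cache.invalidate("SKU-1")                        # e.g. the price changed
    print(cache.get("/product/SKU-1"))               # miss after invalidation
```

The TTL bounds how stale an entry can get if an invalidation event is missed. Invalidation, in turn, avoids waiting for the TTL when data is known to have changed.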

Database preparation

For all new tables added to the schema, monitor tablespace usage and/or define new tablespaces to decouple the footprint of the new tables from that of the existing tables. The indexes for all tables should also be reviewed and refreshed through scheduled maintenance, alongside the other ongoing clean-up routines. Obsolete and aged data should be removed through database clean-up jobs, which should be reviewed quarterly.

Performance Assessment Process

Once the development and database administration preparation is complete, the performance assessment can focus entirely on system-related metrics. Performance testing may still uncover issues introduced during development, but the effort spent minimizing them beforehand will speed up the process.

With performance scheduled into a project as a phase or a parallel path, there will be many repeated cycles and iterations. Any tuning or optimization introduced along the way therefore needs to be precisely defined, so that each changed metric or setting contributes to a better understanding of all the system parameters and indicators.

The high level diagram (image 1) outlines the suggested performance assessment process.

The cycle on the left of the diagram shows the phases of the performance assessment process. The cycle can be repeated many times; for most high-transaction eCommerce solutions there will be many cycles over the delivery life-cycle.

Targets

The process starts by determining the targets, which includes capturing the non-functional requirements. Targets are influenced by factors such as return on investment, user experience and infrastructure capabilities, and they feed into the key performance indicators (KPIs).

Metrics

The basis for the KPIs of an eCommerce solution is public-traffic page views, shopping cart views and completed orders. Each is measured per second and per hour, as both an average and a peak rate. Optimizing for the peak rates is the best strategy, so that campaigns, whether planned or event-driven, can be handled by the system landscape.

Scripting

Once the metrics are prioritized, all of the interfaces that public traffic encounters are grouped into user workload groups. These groups can consist of registered or guest users exercising features such as search, registration, general browsing, pending order creation (cart), checkout and order submission. Each area is given a weight, and the load is distributed to best reflect actual eCommerce traffic. Some weights may be subjective estimates, while others can be based on historical analytics reports.

The scripts are written in a tool that can execute the operations automatically, such as Apache JMeter or LoadRunner. Each individual script can be considered a single operation, such as search, browse or checkout.
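As a rough illustration of weighting, the sketch below splits a total number of virtual users across workload groups in proportion to their weights. It uses the largest-remainder method, so the counts always add up to the total. The group names and percentages are made-up examples, not recommended values; in practice the weights would come from analytics reports.

```python
import math
from typing import Dict


def allocate_users(total_users: int, weights: Dict[str, float]) -> Dict[str, int]:
    """Split total_users across workload groups in proportion to their weights
    (largest-remainder method, so the result sums exactly to total_users)."""
    weight_sum = sum(weights.values())
    if weight_sum <= 0:
        raise ValueError("weights must sum to a positive number")
    exact = {g: total_users * w / weight_sum for g, w in weights.items()}
    alloc = {g: math.floor(x) for g, x in exact.items()}
    leftover = total_users - sum(alloc.values())
    # Give the remaining users to the groups with the largest fractional parts
    by_remainder = sorted(exact, key=lambda g: exact[g] - alloc[g], reverse=True)
    for g in by_remainder[:leftover]:
        alloc[g] += 1
    return alloc


if __name__ == "__main__":
    # Hypothetical workload mix; the weights are illustrative only
    mix = {"guest_browse": 45, "search": 25, "registration": 5,
           "add_to_cart": 15, "checkout": 7, "order_submit": 3}
    print(allocate_users(250, mix))
```

The resulting counts can then be entered as the thread or virtual-user count for each script in whichever test tool the team uses.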

Each operation needs input data in order to execute. Most automated test tools provide a mechanism to feed data systematically into the script's input elements.

This bucket of test data must be prepared before execution.

The test tool that runs these scripts also needs to be configured for the target environment.
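Preparing the test data bucket can be scripted. A minimal Python sketch follows, under stated assumptions. It writes hypothetical test users, search terms and SKUs to CSV, the kind of file a load tool can read row by row (for example through JMeter's CSV Data Set Config). It uses a fixed random seed so that every iteration runs with the same data. The field names, the `perfuserNNNNN@example.com` logon pattern and the placeholder password are all illustrative.

```python
import csv
import io
import random
from itertools import cycle, islice
from typing import Dict, Iterator, List

FIELDS = ["logon_id", "password", "search_term", "sku"]


def build_test_data(n_users: int, search_terms: List[str], skus: List[str],
                    seed: int = 42) -> List[Dict[str, str]]:
    """Create n_users rows of hypothetical test data. A fixed seed keeps
    runs repeatable, so results can be compared across iterations."""
    rng = random.Random(seed)
    return [
        {
            "logon_id": f"perfuser{i:05d}@example.com",
            "password": "Passw0rd!",  # placeholder; load real secrets securely
            "search_term": rng.choice(search_terms),
            "sku": rng.choice(skus),
        }
        for i in range(n_users)
    ]


def to_csv(rows: List[Dict[str, str]]) -> str:
    """Serialize rows to CSV text with a header line."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue()


def feed(rows: List[Dict[str, str]]) -> Iterator[Dict[str, str]]:
    """Recycle rows when virtual users outnumber the data set, similar to
    the recycle-on-end-of-file behaviour some load test tools offer."""
    return cycle(rows)


if __name__ == "__main__":
    rows = build_test_data(3, ["shirt", "shoes"], ["SKU-1", "SKU-2"])
    print(to_csv(rows))
    for row in islice(feed(rows), 5):
        print(row["logon_id"])
```

The users themselves still have to exist in the target environment, so the same data set normally drives a registration or data-load step before the first run.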

Execution

Performance test tools can execute the scripts automatically, simulating the predefined user workloads against the environment. As the tool runs, metrics such as CPU usage, memory utilization and operation response times are captured.

As shown in the diagram, the execution phase has several different iterations:

- Baseline
  - As with any statistical analysis, a control group is required for comparing results, in order to derive significance and confidence that an optimization path is realizable.
- Stress
  - Minimal stress refers to tests that exactly meet the targets. Incremental stress testing beyond the targets can then be used to monitor the response of both the system and the application.
- Endurance
  - Testing that matches the actual intended operational activity of the eCommerce solution over a sustained period can capture significant response data and demonstrate optimal effectiveness.
- Edge
  - Edge cases, such as failover testing, can be aligned with the expected support processes.
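One way to keep these iterations consistent from cycle to cycle is to express the load plan as data. The hypothetical Python sketch below builds a baseline at the target user count, incremental stress steps above it and a long endurance run back at the target. The step size, step count and durations are illustrative assumptions. Edge cases such as failover are usually run as separate, partly manual tests, so they are left out. A tool such as JMeter or LoadRunner would be configured with equivalent values rather than driven from this code.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class LoadStep:
    phase: str
    users: int
    duration_min: int


def build_load_plan(target_users: int, stress_step_pct: int = 25,
                    stress_steps: int = 4, endurance_hours: int = 8) -> List[LoadStep]:
    """Baseline at the target, incremental stress steps above it, then a long
    endurance run at the target. All default values are assumptions."""
    plan = [LoadStep("baseline", target_users, 30)]
    for i in range(1, stress_steps + 1):
        pct = i * stress_step_pct
        users = target_users * (100 + pct) // 100
        plan.append(LoadStep(f"stress+{pct}%", users, 20))
    plan.append(LoadStep("endurance", target_users, endurance_hours * 60))
    return plan


if __name__ == "__main__":
    for step in build_load_plan(target_users=200):
        print(f"{step.phase:<12} {step.users:>5} users for {step.duration_min} min")
```

Keeping the plan fixed between tuning iterations matters more than the exact numbers. Otherwise, changes in response time cannot be attributed to the tuning.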

The response metrics captured during these iterations can be compared across the different loads and the various user workload groups.
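A small aggregation step makes that comparison straightforward. The Python sketch below assumes that raw samples have been exported from the test tool as `(phase, workload group, response time in ms)` tuples; that export format is an assumption. It then reports the count, mean and 95th percentile for each combination, using the nearest-rank percentile method. The sample values in the usage block are invented.

```python
import math
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, List, Tuple

Sample = Tuple[str, str, float]  # (phase, workload group, response time in ms)


def percentile(values: List[float], pct: float) -> float:
    """Nearest-rank percentile of a non-empty list."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def summarize(samples: Iterable[Sample]) -> Dict[Tuple[str, str], Dict[str, float]]:
    """Group samples by (phase, group) and compute count, mean and p95."""
    groups: Dict[Tuple[str, str], List[float]] = defaultdict(list)
    for phase, group, ms in samples:
        groups[(phase, group)].append(ms)
    return {
        key: {"count": len(v), "mean_ms": mean(v), "p95_ms": percentile(v, 95)}
        for key, v in groups.items()
    }


if __name__ == "__main__":
    runs = [("baseline", "search", 320.0), ("baseline", "search", 410.0),
            ("stress+50%", "search", 650.0), ("baseline", "checkout", 900.0)]
    for (phase, group), stats in sorted(summarize(runs).items()):
        print(phase, group, stats)
```

Percentiles are usually more telling than averages here, because a small share of very slow checkouts can hide behind a healthy mean.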

On top of capturing response metrics from the tool, the team can also benefit from profiling the system and the code, and aligning the results with the expected KPIs. The monitoring tools already used by the support groups can also be reused to support findings during the performance assessment.

These independent monitoring tools can be grouped into the following application layers:

- Analytics
  - Adobe Analytics (SiteCatalyst)
  - Google Analytics
- JVM Monitoring
- Database
  - Native database server performance monitoring tools
- Operating System

Once execution is completed, the results are captured and then analysed by the team. The analysis turns into input for the tuning proposals.

Tuning

Once tuning proposals are accepted, the entire process is repeated so that the newly captured response times can be compared against the previous (control) result set. With each new iteration of tests, confidence increases that the performance optimization can be achieved.
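The comparison against the control result set can be automated. The minimal Python sketch below takes 95th-percentile response times per operation from a baseline run and a tuned run. It then classifies each operation as improved, regressed or unchanged, within an assumed 5% tolerance. Operation names and figures in the usage block are invented, and the tolerance should be set from the run-to-run variance the team actually observes.

```python
from typing import Dict


def compare_runs(baseline_p95: Dict[str, float], tuned_p95: Dict[str, float],
                 tolerance: float = 0.05) -> Dict[str, str]:
    """Classify each operation present in both runs by its relative p95 change."""
    verdicts: Dict[str, str] = {}
    for op in sorted(baseline_p95.keys() & tuned_p95.keys()):
        change = (tuned_p95[op] - baseline_p95[op]) / baseline_p95[op]
        if change <= -tolerance:
            verdicts[op] = f"improved ({change:+.1%})"
        elif change >= tolerance:
            verdicts[op] = f"regressed ({change:+.1%})"
        else:
            verdicts[op] = f"unchanged ({change:+.1%})"
    return verdicts


if __name__ == "__main__":
    baseline = {"search": 800.0, "checkout": 1200.0, "browse": 400.0}
    tuned = {"search": 600.0, "checkout": 1300.0, "browse": 410.0}
    for op, verdict in compare_runs(baseline, tuned).items():
        print(f"{op:<10} {verdict}")
```

A regression in one operation after a tuning change, like checkout in the example, is exactly the signal that sends the proposal back for another look.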

Below are some notable tuning adjustments to consider. It is a good idea to keep track of these parameters throughout the process:

- OS level
  - CPU overhead needed to accommodate the JVM heap size when using large pages
  - TCP/IP timeout to release connections
- Web Layer
  - Number of processes allowed
  - Maximum number of idle threads allowed
  - Enabling caching of static content. The CDN may also, or exclusively, serve as the static cache if it has invalidation tooling, assuming a CDN is in operation. See CDN solutions such as Zenedge and Akamai.
- Application Layer settings
  - Database connection pool settings (JDBC)
    - Align the number of connections with the web container thread pool size
  - Statement cache
    - The effectiveness of the prepared statement cache depends on the use of parametric queries in the code
- Load Balancer
  - Whether the firewall is reused as a load balancer or a dedicated device is used may affect the design of health checking.
- Cache Settings
  - The disk offload location needs managed disk space. Avoid the /tmp directory, which conflicts with other OS housekeeping.
- Search layer (e.g. SOLR)
  - JVM settings
  - Web container threads: a sensitive setting because of synchronization issues under high load. Adding threads does not by itself eliminate synchronization and contention issues, so size this pool through testing.
- Database Layer
  - Database Manager
    - Number of databases (NUMDB in DB2): tighten this value so that the database manager does not reserve resources for databases that will not be active
  - Database Configuration
    - Locklist: align with the locks required by the eCommerce application
    - Sortheap: may need to be increased if queries depend heavily on sorting
    - Bufferpool thresholds for higher transaction workloads
    - DB self-tuning features may conflict with manually raised bufferpool or sort heap sizes as activity fluctuates. This can be controlled through endurance/stress testing.
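Keeping track of these parameters can be as simple as recording the parameter set used for every test iteration and diffing consecutive iterations. The Python sketch below does that and adds one illustrative sanity check. The check assumes a rule of thumb that the JDBC pool should allow at least as many connections as there are web container threads. The parameter names (`webcontainer.max_threads`, `jdbc.max_connections`, `db.sortheap`) are hypothetical labels, not real configuration keys.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple, Union

Value = Union[int, str]


@dataclass
class TuningLog:
    """Record the parameter set used for each test iteration so that results
    can be traced back to the exact configuration. Names are hypothetical."""
    iterations: List[Dict[str, Value]] = field(default_factory=list)

    def record(self, params: Dict[str, Value]) -> None:
        self.iterations.append(dict(params))

    def diff(self, a: int, b: int) -> Dict[str, Tuple[Optional[Value], Optional[Value]]]:
        """Parameters that changed between iterations a and b, as (old, new)."""
        old, new = self.iterations[a], self.iterations[b]
        return {k: (old.get(k), new.get(k))
                for k in sorted(old.keys() | new.keys())
                if old.get(k) != new.get(k)}


def check_pool_alignment(params: Dict[str, Value]) -> List[str]:
    """Assumed rule of thumb: JDBC pool size >= web container thread count."""
    threads = int(params.get("webcontainer.max_threads", 0))
    pool = int(params.get("jdbc.max_connections", 0))
    if pool < threads:
        return [f"jdbc.max_connections={pool} < webcontainer.max_threads={threads}"]
    return []


if __name__ == "__main__":
    log = TuningLog()
    log.record({"webcontainer.max_threads": 50, "jdbc.max_connections": 40})
    log.record({"webcontainer.max_threads": 50, "jdbc.max_connections": 60})
    print(log.diff(0, 1))
    print(check_pool_alignment(log.iterations[0]))
```

Changing one parameter per iteration keeps the diff small, so any shift in the response metrics can be attributed to a single change.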

Note that the monitoring tools used during the performance assessment should remain in place in the production environment. That way, real-time traffic can be monitored, and the feedback can be turned into targets for future performance assessment cycles.

Tuesday, March 15, 2016

Improving the Quality of Adobe Dynamic Tag Management Delivery

The value of a tag manager is that it provides a single container for tags: analytics tools, AdWords integration, Floodlight beacons or third-party alerting, and dynamic tag deployment.

The Adobe Dynamic Tag Management architecture is organized into multiple web properties. Each contains global data elements, tightly coupled analytics integration tooling, and flexible event and page rule management.

The most flexible set-up is likely a single web property, delivered either through a content delivery network or as a self-hosted library.

Within this single web property, there are production and staging versions of the tag management libraries.

All of the lower life-cycle environments are associated with the staging version of the DTM (Dynamic Tag Management) libraries.

The live production site is associated with the production version of the DTM libraries. However, DTM users can install the DTM Switch browser plugin to easily toggle debug mode and staging mode. It is available for both Google Chrome and Mozilla Firefox, and it enables debugging, validating changes and checking page load performance.

DTM has been designed to be intuitive, enabling marketers to quickly deploy tags to reduce dependency on IT.

DTM however cannot be entirely loosely coupled from the rest of the solution. There is common effort to optimize page load, reduce JavaScript errors as well as minimizing duplication.

The quality delivery of DTM may have some dependency on IT governance. This education entry hopes to address those areas where IT governance takes responsibility.

The only change required to integrate DTM into any solution would assume there is a common page rendering fragment such as the header, footer or some granular reference.

That single reference to the DTM library needs to be a synchronous file load into the web page compounding some load time.

The best route would be to impose the library into the CDN (content delivery network) so that it has the opportunity to be cached to dramatically minimize the impact.

Once the DTM library is loaded into the web page, then the inner functionality within DTM will deploy all of the injected script tools, data elements, event-based and page based rules.

As outlined in the diagram, it is assumed that all secondary threads (inner injected scripts) will load asynchronously, however, that deserves governance.

Part of the responsibility of IT governance is to keep track of all of the trigger rules imposed for each of the inner scripts.

The following page trigger rules each have various benefits:

There are general page load speed considerations which incorporates both the DTM as well as all other page rendering assets. Those general considerations are listed below:

There are essentially six areas that affect page load speed:

Specific for the DTM concerns, the IT governance needs to create a check-list and ensure the following details are satisfied across DTM and other rendered page assets:

The added responsibility of the IT group would be to focus on the above non-functional requirement of "Optimized timing and delivery sequencing that can be modified by the client".

The sequencing and timing can be managed through keeping track of all page trigger rules as outlined above.

Apart from DTM, there may be other scripts in the solution that is also depending on trapping user interaction on the rendered tags.

This does cause concern when double click protection against tags is required. jQuery has some bind/unbind requirements when treating event trapping.

Other solutions also use Dojo which also has feature rich functionality. The strategy is for IT to simply keep track of all event based rules whether in DTM and other script dependencies in the solution.

For double click prevention, these assets should always be regression tested upon any event-based rule change in DTM.

The general workflow in DTM does enable the opportunity for IT to govern the code through review.

For every change in DTM, there is a historical comparison between change, as well, there is notation coverage to which the marketing team to assign comments to associate with each change.

Additionally, the IT governance team can respectively notate comments to align with change.

The most important workflow state is the publish operation.

As outline in the diagram, all changes are queued into a "Ready to Publish" state to which can easily be tracked and reviewed. Once the changes are reviewed, the "Publish" operation will push all the changes that can be delivered into production.

As part of the review operation, below are some considerations for the DTM coding best practices

It is best for the reviewer to request from the DTM code change recipient what was covered in the unit testing and how it was addressed.

As suggested earlier, there is a DTM Switch tag manager that does enable some testing in production, which would influence the most realistic unit testing results.

However, testing in production does not assume absolute unit testing coverage. Based on review of unit testing coverage, a review may be able to brainstorm some outstanding concerns that may need to be addressed in unit testing.

The easiest approach to unit testing review is to always enter in the unit test cases into the notes under the change entry for the submission.

Even though DTM does have outstanding seamless error handling (through the iFrame injection and or separation of tag script responsibility), there are still those cases where expected tags are missing on the page which breaks dependent code. In some cases, when JavaScript breaks, the entire site could break.

Especially if the code is executing site wide, on every page, where some granular pages may not include those expected tags or contain custom formatting which is not under the control of the DTM scope.

However, with IT governance, re-write that 3rd party code in a manner which ensures that it can easily be understood in the details apart from depending on the title of the rule to define the operation.

Whenever tags are consumed for their data, always first check if the element exists, then check if the element is null, then consume the element data, then the code should be safe.

Additionally, whenever iterating elements or sub-elements of the page tags, check first if those elements exist first before initiating the iteration.

Wrap those logging with a debug flag so that only the staging version of the code is tracing the verbose to the browser console. There is no value for end users to view the browser console activity.

Tools, AdWords, 3rd party beacons, floodlights and other operations tend to use the same tag information in the page.

Most of the tags are in various areas of the page and some of the tag data may not exist.

One operation the IT team could provide benefit to the DTM team is to supply a foundation of tag data available on each page through the solution.

Remember, Dynamic Tag Management depends on one main factor - tags and the information those tags carry.

Create a set of hidden tags which the same class name that contain the required information that can easily be consumed by DTM.

That way, the DTM scripts can easily iterate over the acknowledge class and with the array subsequently inspect each tag, inspect the information, operate the logic and contain the integration points.

This will reduce the dependency on ensuring there is that alignment between spurious tagging expected on pages, while enhancing the operational support when integrating with analytics and 3rd parties.

The diagram below illustrates the tracker alerting capability:

For each of the trackers that are listed, you can click into those communication specifications to drill into the data that has been collected while also validating the endpoint integration with the 3rd parties. You can capture the communicated URL's that are constructed out of the trackers and share that with your integration point of contacts.

Below is a snapshot of the trackers that are exposed through the Tag Assistant:

Tag assistant provides a lot more debugging insight to help you understand where there may be errors in the tagging scripts. Google Tag Assistant is tightly coupled with known trackers so only specific implementations can be debugged.

The added value of the Tag Assistant add on is that it can provide details low level tag information based on the metrics intended to be communicated to the third parties.

Below is a sample of what appears in the metrics report for a particular tracker:

Based on these addons reviewed above, you can get adequate coverage to validate that the tag management scripts are effectively capturing the right information and communicating with third parties as per the specification expectations.

The Adobe Dynamic Tag Manager architecture is organized into web properties, each containing global data elements, tightly coupled analytics integration tooling, and flexible event-based and page rule management.

The most flexible setup is likely a single web property, with its library hosted either through a content delivery network or self-hosted.

Within this single web property, there is a production version and a staging version of tag management.

All of the lower life-cycle environments point to the staging version of the DTM (Dynamic Tag Management) libraries.

The live production site points to the production version of the DTM libraries; however, a DTM Switch browser plugin is available that lets dynamic tag management users easily switch into debug mode and staging mode. It is available for both Google Chrome and Mozilla Firefox. The DTM Switch plugin enables debugging, validating changes and capturing page load performance.
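To keep the staging/production split in one place, the shared page fragment can decide which DTM library to reference. The following is a minimal TypeScript sketch, assuming hypothetical environment names and placeholder library URLs; substitute the production and staging embed codes that DTM generates for your web property.

```typescript
// Hypothetical life-cycle environment names; adjust to your own pipeline.
type Environment = "dev" | "qa" | "uat" | "production";

// Placeholder URLs: replace with the production and staging embed codes
// generated for your single DTM web property.
const DTM_LIBRARY = {
  production: "https://cdn.example.com/dtm/satelliteLib-PROD.js",
  staging: "https://cdn.example.com/dtm/satelliteLib-STAGING.js",
} as const;

// Only the live production site loads the production library;
// every lower life-cycle environment loads the staging library.
function dtmLibraryUrl(env: Environment): string {
  return env === "production" ? DTM_LIBRARY.production : DTM_LIBRARY.staging;
}

for (const env of ["dev", "qa", "uat", "production"] as const) {
  console.log(`${env}: ${dtmLibraryUrl(env)}`);
}
```

Because the decision lives in one shared fragment, promoting a build through the life-cycle environments never requires editing individual page templates.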

DTM has been designed to be intuitive, enabling marketers to deploy tags quickly with less dependency on IT.

However, DTM cannot be entirely decoupled from the rest of the solution. Optimizing page load, reducing JavaScript errors and minimizing duplication are shared efforts.

The quality of DTM delivery therefore depends partly on IT governance. This education entry addresses the areas where IT governance takes responsibility.

General Delivery Architecture

Every page within the solution needs a pointer to the DTM library along with a satellite invocation point. Integrating DTM into any solution therefore assumes a common page rendering fragment, such as the header, footer or another shared include, where that single change can be made.

That single reference to the DTM library must be a synchronous file load, which adds some page load time.

The best route is to serve the library from the CDN (content delivery network) so that it can be cached, which dramatically minimizes the impact.
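As a sketch of that single change, the following assumes a hypothetical server-side helper that emits the shared header and footer fragments: the synchronous library include goes in the <HEAD/>, and the _satellite.pageBottom() invocation point goes just before the closing </BODY> tag. The URL is a placeholder for your CDN-hosted library, and the helper names are illustrative only.

```typescript
// Hypothetical helper: the header fragment carries a plain (synchronous)
// script tag, because DTM must load before the rules it deploys can run.
function renderDtmHeaderInclude(libraryUrl: string): string {
  return `<script src="${libraryUrl}"></script>`;
}

// Hypothetical helper: the footer fragment carries the satellite
// invocation point, placed just before </body>.
function renderDtmFooterInvocation(): string {
  return `<script>_satellite.pageBottom();</script>`;
}

// Assemble a minimal page to show where each fragment lands.
function renderPage(libraryUrl: string, bodyHtml: string): string {
  return [
    "<!DOCTYPE html>",
    "<html>",
    "<head>",
    renderDtmHeaderInclude(libraryUrl),
    "</head>",
    "<body>",
    bodyHtml,
    renderDtmFooterInvocation(),
    "</body>",
    "</html>",
  ].join("\n");
}

console.log(renderPage("https://cdn.example.com/dtm/satelliteLib-PROD.js", "<h1>Home</h1>"));
```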

Once the DTM library is loaded into the web page, DTM deploys all of the injected tool scripts, data elements, and event-based and page-based rules.

As outlined in the diagram, all secondary scripts (the inner injected scripts) are assumed to load asynchronously; however, that assumption deserves governance.

Part of the responsibility of IT governance is to keep track of all of the trigger rules imposed for each of the inner scripts.

The following page trigger rules each have different benefits and trade-offs:

- Top of Page

- Some 3rd party integrations may require global injection so that the script is loaded before subsequent page assets - this imposes strain on page load optimization

- Sequential HTML: Injected into <HEAD/> below DTM library include script if <SCRIPT/> tags are used, otherwise is injected at top of <BODY/>

- Sequential JavaScript global: Injected into <HEAD/> below DTM include script as JavaScript include <SCRIPT/>

- Sequential JavaScript local: Injected into <HEAD/> below DTM include script as JavaScript include <SCRIPT/>

- Non-sequential JavaScript: Injected as asynchronous <SCRIPT/> in <HEAD> below DTM library include script

- Non-sequential HTML: Injected as hidden IFRAME and does not affect page HTML

- Bottom of Page

- Assets that need to be loaded before the page is ready for JavaScript operation - this imposes strain on the page load optimization

- Sequential HTML: Injected after _satellite.pageBottom() callback script with document.write() prior to DOMREADY so that there is no destruction of the visible page

- Sequential JavaScript global: Injected after _satellite.pageBottom() as JavaScript include <SCRIPT/>

- Sequential JavaScript local: Injected after _satellite.pageBottom() as JavaScript include <SCRIPT/>

- Non-sequential JavaScript: Injected as asynchronous <SCRIPT/> in <HEAD> below DTM library include script

- Non-sequential HTML: Injected as hidden IFRAME and does not affect page HTML

- DOM Ready

- Assets that can be incorporated into the restructuring of rendered tags just before JavaScript operation begins - this imposes the least strain on page load optimization

- Sequential HTML: Will not work because DOMREADY has already fired and document.write() would overwrite the page

- Sequential JavaScript global: Injected into <HEAD/> below DTM include script as JavaScript include <SCRIPT/>

- Sequential JavaScript local: Injected into <HEAD/> below DTM include script as JavaScript include <SCRIPT/>

- Non-sequential JavaScript: Injected as asynchronous <SCRIPT/> in <HEAD> below DTM library include script

- Non-sequential HTML: Injected as hidden IFRAME and does not affect page HTML

- Onload (window load)

- Assets that can fire JavaScript operations at any time after all other JavaScript operations may have completed. This is usually used for firing pixel beacons to third parties, and it is the option best optimized for page load.

- Sequential HTML: Will not work because DOMREADY has already fired and document.write() would overwrite the page

- Sequential JavaScript global: Injected into <HEAD/> below DTM include script as JavaScript include <SCRIPT/>

- Sequential JavaScript local: Injected into <HEAD/> below DTM include script as JavaScript include <SCRIPT/>

- Non-sequential JavaScript: Injected as asynchronous <SCRIPT/> in <HEAD> below DTM library include script

- Non-sequential HTML: Injected as hidden IFRAME and does not affect page HTML
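Keeping track of trigger rules becomes reviewable when IT governance maintains an inventory of every page load rule with its trigger and injection type. A minimal sketch, assuming a hypothetical inventory format (DTM does not export one in this shape), that flags the combinations the list above marks as unworkable and the triggers that strain page load:

```typescript
// Trigger positions and injection types as described in the list above.
type Trigger = "topOfPage" | "bottomOfPage" | "domReady" | "onload";
type Injection =
  | "sequentialHtml"
  | "sequentialJsGlobal"
  | "sequentialJsLocal"
  | "nonSequentialJs"
  | "nonSequentialHtml";

// One entry per DTM page load rule script; the fields are hypothetical.
interface RuleEntry {
  rule: string;
  trigger: Trigger;
  injection: Injection;
}

interface Finding {
  rule: string;
  level: "error" | "warning";
  message: string;
}

function reviewRules(rules: RuleEntry[]): Finding[] {
  const findings: Finding[] = [];
  for (const r of rules) {
    // Sequential HTML relies on document.write(), which would overwrite
    // the page once DOM Ready (or window load) has fired.
    if (r.injection === "sequentialHtml" && (r.trigger === "domReady" || r.trigger === "onload")) {
      findings.push({ rule: r.rule, level: "error", message: "sequential HTML cannot run at DOM Ready or Onload" });
    }
    // Top and bottom of page triggers strain page load optimization,
    // so each one should carry a documented justification.
    if (r.trigger === "topOfPage" || r.trigger === "bottomOfPage") {
      findings.push({ rule: r.rule, level: "warning", message: `${r.trigger} trigger strains page load; justify it` });
    }
  }
  return findings;
}

const inventory: RuleEntry[] = [
  { rule: "Analytics page view", trigger: "topOfPage", injection: "sequentialJsGlobal" },
  { rule: "Affiliate pixel", trigger: "onload", injection: "nonSequentialHtml" },
  { rule: "Legacy survey", trigger: "domReady", injection: "sequentialHtml" },
];

for (const f of reviewRules(inventory)) {
  console.log(`[${f.level}] ${f.rule}: ${f.message}`);
}
```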

Page load speed improvements

Based on the above concerns for the trigger rules, there is a high-level approach to improving page load speed. The general considerations below apply to DTM as well as to all other page rendering assets.

There are essentially six areas that affect page load speed:

- Caching of site assets

- Creating site pages from the server

- Reducing the number of downloaded files

- Reducing the size of downloaded files

- Improving the connection speed to source files

- Converting sequential file loading to parallel file loading

For DTM specifically, IT governance needs to create a checklist and ensure the following details are satisfied across DTM and other rendered page assets:

- Self-hosted static file delivery that eliminates the dependency on third-party hosting and DNS

- Parallelization of tag loading through asynchronous delivery

- Tag killing options with timeouts that can be modified by the client

- Dramatically reduced file size through the optimized dynamic tag management library schema

- Enhanced file compression and delivery

- Optimized timing and delivery sequencing that can be modified by the client

- Client-side delivery of file assets that eliminates any additional server-side processing
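The "tag killing options with timeouts" item can be illustrated by racing each tag load against a client-configurable timeout, while loading all tags in parallel. This is a hypothetical sketch, not DTM's built-in mechanism; the loader is any async function standing in for script injection, and the tag names are invented.

```typescript
// Result of attempting to load one third-party tag.
type TagResult = { tag: string; status: "loaded" | "killed" | "failed" };

// Hypothetical loader signature: in the browser this would inject a
// <script> and resolve on its load event; here it is any async function.
type TagLoader = () => Promise<void>;

// Resolve with "killed" if the tag has not loaded within timeoutMs.
// Whichever outcome happens first wins; later resolve calls are ignored.
function loadWithTimeout(tag: string, load: TagLoader, timeoutMs: number): Promise<TagResult> {
  return new Promise<TagResult>((resolve) => {
    const timer = setTimeout(() => resolve({ tag, status: "killed" }), timeoutMs);
    load().then(
      () => {
        clearTimeout(timer);
        resolve({ tag, status: "loaded" });
      },
      () => {
        clearTimeout(timer);
        resolve({ tag, status: "failed" });
      },
    );
  });
}

// Simulated tags: one fast, one slow, one failing.
const delay = (ms: number): Promise<void> => new Promise((r) => setTimeout(r, ms));

async function main(): Promise<TagResult[]> {
  const timeoutMs = 200; // the client-tunable threshold
  // Promise.all starts every load at once (parallel, not sequential).
  return Promise.all([
    loadWithTimeout("fast-beacon", () => delay(50), timeoutMs),
    loadWithTimeout("slow-beacon", () => delay(1000), timeoutMs),
    loadWithTimeout("broken-beacon", () => Promise.reject(new Error("404")), timeoutMs),
  ]);
}

main().then((results) => console.log(results));
```

A timeout like this only stops the page from waiting on the tag; it does not cancel the request itself, so a real implementation would also detach the pending script element so a late tag cannot run.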

The added responsibility of the IT group is to focus on the non-functional requirement above: "Optimized timing and delivery sequencing that can be modified by the client".

That sequencing and timing can be managed by keeping track of all page trigger rules, as outlined above.

Event-based rules best practices

In some cases, additional analytics need to be captured based on events such as button clicks or other user interaction. Apart from DTM, other scripts in the solution may also depend on trapping user interaction on the same rendered tags.

This is a concern when double-click protection is required on those tags. jQuery, for example, has bind/unbind requirements when trapping events.

Other solutions use Dojo, which also has feature-rich event handling. The strategy is for IT to keep track of all event-based rules, whether they live in DTM or in other script dependencies in the solution.

To preserve double-click prevention, these assets should always be regression tested after any event-based rule change in DTM.
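Whether events are trapped through jQuery, Dojo or DTM event-based rules, double-click protection is easier to regression test when every beacon passes through one shared guard. A minimal sketch, assuming a hypothetical createClickGuard helper and a 500 ms window; the clock is injected so the logic can be tested outside the browser.

```typescript
// Hypothetical shared guard: every event-based rule that fires a beacon
// asks shouldFire(elementId) first, so repeat clicks are suppressed once.
function createClickGuard(windowMs: number, now: () => number = Date.now) {
  const lastFired = new Map<string, number>();
  return function shouldFire(elementId: string): boolean {
    const t = now();
    const previous = lastFired.get(elementId);
    if (previous !== undefined && t - previous < windowMs) {
      return false; // repeat click inside the window: suppress the beacon
    }
    lastFired.set(elementId, t);
    return true;
  };
}

// Simulated clicks on an "add to cart" button at 0 ms, 150 ms and 900 ms.
let fakeTime = 0;
const shouldFire = createClickGuard(500, () => fakeTime);
const fired: number[] = [];
for (const at of [0, 150, 900]) {
  fakeTime = at;
  if (shouldFire("add-to-cart")) fired.push(at);
}
console.log(fired);
```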

Publish Workflow

Across the tools as well as the rule-based scripts, IT should provide governance over coding best practices. The general workflow in DTM gives IT the opportunity to govern the code through review.

For every change in DTM, there is a historical comparison against the previous version, and a notes feature lets the marketing team attach comments to each change.

The IT governance team can likewise add its own notes to each change.

The most important workflow state is the publish operation.

As outlined in the diagram, all changes are queued in a "Ready to Publish" state, where they can easily be tracked and reviewed. Once the changes are reviewed, the "Publish" operation pushes all the changes that are ready for delivery into production.

As part of the review operation, consider the following DTM coding best practices.

DTM Coding Best Practices

My previous education entry, "Improving the quality of WebSphere Commerce customizations", covered the delivery of quality eCommerce solutions, and some of its key points can be reiterated here for DTM coding.

Common themes of unit testing

Part of the responsibility of reviewing submitted code is to ensure there is unit testing coverage. The reviewer should ask the author of the DTM code change what the unit testing covered and how it was addressed.

As suggested earlier, the DTM Switch plugin enables some testing in production, which yields the most realistic unit testing results.

However, testing in production does not guarantee complete unit testing coverage. By reviewing the unit testing coverage, a reviewer may be able to brainstorm outstanding concerns that still need to be addressed in unit testing.

The easiest approach to unit testing review is to always record the unit test cases in the notes of the change entry for the submission.

Practising defensive programming techniques

This is the single most important factor in governing code change, especially when it comes to JavaScript. Even though DTM has excellent, seamless error handling (through iFrame injection and/or separation of tag script responsibility), there are still cases where expected tags are missing on the page, which breaks dependent code. In some cases, when JavaScript breaks, the entire site could break.

This is especially true when the code executes site-wide, on every page, because some granular pages may not include the expected tags or may contain custom formatting that is outside the control of the DTM scope.
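One way to contain site-wide rules is to have each rule declare the tags it depends on and skip any page where one of them is missing. A minimal sketch, assuming a hypothetical runIfTagsPresent helper and minimal DOM stand-ins so it runs outside the browser; in DTM you would pass the real document, and the selectors are invented for illustration.

```typescript
// Minimal stand-ins for the DOM so the sketch runs outside a browser.
interface ElementLike {
  textContent: string | null;
}
interface PageLike {
  querySelector(selector: string): ElementLike | null;
}

// Hypothetical pattern: a site-wide rule declares the tags it depends on
// and does nothing on pages that lack any of them, instead of throwing.
function runIfTagsPresent(
  page: PageLike,
  required: string[],
  rule: (found: Map<string, ElementLike>) => void,
): boolean {
  const found = new Map<string, ElementLike>();
  for (const selector of required) {
    const el = page.querySelector(selector);
    if (el === null) {
      return false; // expected tag missing on this page: skip the rule
    }
    found.set(selector, el);
  }
  rule(found);
  return true;
}

// A fake page that has a product name but no price tag.
const productPage: PageLike = {
  querySelector: (s) => (s === "#product-name" ? { textContent: "Widget" } : null),
};

const ran = runIfTagsPresent(productPage, ["#product-name", "#product-price"], (tags) => {
  console.log("sending price", tags.get("#product-price")?.textContent);
});
console.log(ran ? "rule ran" : "rule skipped: expected tags missing");
```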

Readable code

Keep the code readable. Most of the time, the 3rd party sends over code that can easily be incorporated into DTM, and in most cases it is straightforward to title the rule after the 3rd party and the external support expected for the script. However, under IT governance, rewrite that 3rd party code so its details can easily be understood, rather than depending on the title of the rule to define the operation.

Handle nulls correctly

Although it may seem repetitive to continually check for nulls on every single variable, do it so that the code is protected. Optimistic coders always assume the best, and they may be right most of the time. However, the one time there is a null or invalid value in a parameter, the code fails, and when that JavaScript is imposed on every page of a component, the entire solution could fail.

Whenever tags are consumed for their data, first check that the element exists, then check that it is not null, and only then consume the element data. That keeps the code safe.

Additionally, whenever iterating over elements or sub-elements of the page tags, first check that those elements exist before starting the iteration.
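The order of checks described above can be captured in small helpers so every rule reads tags the same way. A sketch, assuming hypothetical readAttr and readChildAttrs helpers and a minimal stand-in for DOM elements (only getAttribute and a children collection are used); the data-* attribute names are invented.

```typescript
// Minimal stand-in for a DOM element: only what the helpers need.
interface TagLike {
  getAttribute(name: string): string | null;
  children?: ArrayLike<TagLike> | null;
}

// Check that the element exists, then that the value is not null,
// and only then consume it; otherwise return the fallback.
function readAttr(el: TagLike | null | undefined, name: string, fallback = ""): string {
  if (el === undefined || el === null) return fallback; // element missing
  const value = el.getAttribute(name);
  if (value === null) return fallback; // attribute absent
  return value.trim();
}

// Check that the collection exists before iterating its sub-elements,
// and skip children that do not carry the attribute.
function readChildAttrs(el: TagLike | null | undefined, name: string): string[] {
  if (!el || !el.children) return [];
  const values: string[] = [];
  for (let i = 0; i < el.children.length; i++) {
    const v = readAttr(el.children[i], name);
    if (v !== "") values.push(v);
  }
  return values;
}

// Stand-in elements for the example.
function fakeTag(attrs: Record<string, string>, children?: TagLike[]): TagLike {
  return {
    getAttribute: (n) => (Object.prototype.hasOwnProperty.call(attrs, n) ? attrs[n] : null),
    children: children ?? null,
  };
}

const cart = fakeTag({ "data-cart-id": " 42 " }, [
  fakeTag({ "data-sku": "SKU-1" }),
  fakeTag({}), // a line item without a SKU
  fakeTag({ "data-sku": "SKU-2" }),
]);
console.log(readAttr(cart, "data-cart-id"));
console.log(readAttr(null, "data-cart-id", "n/a")); // tag missing on this page
console.log(readChildAttrs(cart, "data-sku"));
```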

Catch everything

Every script that depends on reading tags, and every script that pulls data, should provide a try/catch section. That way, if there are any unknown errors, at least they will not disrupt the operation or display in the browser debugger console. JavaScript makes catching easy, so imposing this as a constraint that must be code reviewed adds little overhead.

Logging

Debug output to the browser console is very useful, because it lets the DTM scripts be validated during page load and dynamic script operation. Wrap that logging in a debug flag so that only the staging version of the code writes verbose traces to the browser console. There is no value in end users seeing the browser console activity.
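Catching everything and debug-gated logging combine naturally in one wrapper. The sketch below assumes a hypothetical DEBUG constant that is true only in the staging build and a hypothetical safeRule wrapper; DTM does not provide either under these names.

```typescript
// Hypothetical flag: true only in the staging build, false in production,
// so end users never see verbose console output.
const DEBUG = true;

function debugLog(...args: unknown[]): void {
  if (DEBUG) console.log("[dtm]", ...args);
}

// Wrap every rule that reads tags or pulls data so an unexpected error
// is contained (and traced in staging) instead of breaking the page.
function safeRule<T>(name: string, body: () => T, fallback: T): T {
  try {
    const result = body();
    debugLog(`${name}: ok`, result);
    return result;
  } catch (err) {
    debugLog(`${name}: failed`, err);
    return fallback;
  }
}

// Optimistic code that assumes the product object exists; on this page
// it does not, so the property access throws a TypeError.
const pageData: { product?: { price: number } } = {};
const price = safeRule("product-price", () => pageData.product!.price, 0);
debugLog("continuing with price", price);
```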

Tag Data Enhancement

If you have read this far into this education material, here is some bonus material that will really help the entire solution.

DTM tools, AdWords, 3rd party beacons, Floodlight tags and other operations tend to use the same tag information on the page.

Most of these tags are spread across various areas of the page, and some of the tag data may not exist.

One way the IT team can benefit the DTM team is to supply a foundation of tag data available on every page of the solution.

Remember, Dynamic Tag Management depends on one main factor - tags and the information those tags carry.

Create a set of hidden tags with the same class name that contain the required information, so it can easily be consumed by DTM.

That way, the DTM scripts can easily iterate over the agreed-upon class and, with the resulting array, inspect each tag and its information, run their logic and keep the integration points contained.

This reduces the dependency on aligning with the scattered tagging expected on pages, while enhancing operational support when integrating with analytics and 3rd parties.
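A sketch of this hidden tag foundation, assuming a hypothetical dtm-data class name and data-key/data-value attributes: the server renders one hidden element per value, and a DTM script iterates the shared class once to build a lookup that every rule can use.

```typescript
// Hypothetical convention: each page renders hidden elements sharing one
// class name, with key/value pairs carried in data-* attributes.
const DTM_DATA_CLASS = "dtm-data";

// Server side: escape values so catalog text cannot break the markup.
function escapeAttr(s: string): string {
  return s
    .replace(/&/g, "&amp;")
    .replace(/"/g, "&quot;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;");
}

function renderDataTags(data: Record<string, string>): string {
  return Object.entries(data)
    .map(
      ([key, value]) =>
        `<span class="${DTM_DATA_CLASS}" data-key="${escapeAttr(key)}" data-value="${escapeAttr(value)}" hidden></span>`,
    )
    .join("\n");
}

// DTM side: the minimal element shape the collector needs. In the browser,
// pass document.getElementsByClassName(DTM_DATA_CLASS).
interface DataTagLike {
  getAttribute(name: string): string | null;
}

// Iterate the shared class once and build a lookup, skipping any element
// whose key or value is missing.
function collectTagData(tags: ArrayLike<DataTagLike> | null | undefined): Map<string, string> {
  const data = new Map<string, string>();
  if (!tags) return data;
  for (let i = 0; i < tags.length; i++) {
    const key = tags[i].getAttribute("data-key");
    const value = tags[i].getAttribute("data-value");
    if (key !== null && value !== null) data.set(key, value);
  }
  return data;
}

// Usage with stand-in elements in place of the rendered spans.
function fakeTag(key: string, value: string): DataTagLike {
  return { getAttribute: (n) => (n === "data-key" ? key : n === "data-value" ? value : null) };
}

console.log(renderDataTags({ pageType: "product", sku: "SKU-1" }));
const pageData = collectTagData([fakeTag("pageType", "product"), fakeTag("sku", "SKU-1")]);
console.log(pageData.get("pageType"), pageData.get("sku"));
```

Centralizing the data this way means a rule only ever has to check one lookup, rather than hunting for tags in various areas of each page.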

Validation

When the tag manager submissions are published, the site consumes the changes and the tag behaviour engages. This requires real-time validation to ensure that tag information is collected properly and communicated to 3rd parties. Two tools can help facilitate this validation:

Ghostery

The Ghostery plugin installs quickly and easily into the browser. Once it is configured and enabled, it provides feedback in the browser, alerting you to all of the trackers executing on any web page.

The diagram below illustrates the tracker alerting capability:

For each listed tracker, you can click into its communication details to drill into the data that has been collected, while also validating the endpoint integration with the 3rd party. You can capture the URLs constructed by the trackers and share them with your integration points of contact.
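Captured beacon URLs can also be checked programmatically against the integration specification before sharing them. A minimal sketch using the standard URL API, assuming a hypothetical beacon endpoint and parameter names; these do not come from any real vendor's specification.

```typescript
// Hypothetical spec: the parameters a 3rd party expects on its beacon.
const expectedParams = ["pid", "orderId", "revenue"];

// Return every required parameter that is absent or empty in the URL.
function missingParams(beaconUrl: string, required: string[]): string[] {
  const params = new URL(beaconUrl).searchParams;
  return required.filter((name) => {
    const value = params.get(name);
    return value === null || value === "";
  });
}

// A URL as captured from the tracker details in the browser plugin.
const captured = "https://beacon.example.com/px?pid=123&orderId=&currency=USD";
const missing = missingParams(captured, expectedParams);
console.log(missing.length === 0 ? "beacon matches spec" : `missing or empty: ${missing.join(", ")}`);
```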

Google Tag Assistant

The Google Tag Assistant behaves much like Ghostery, in that it responds to the activity captured and communicated to third parties by the tagging scripts.

Below is a snapshot of the trackers that are exposed through the Tag Assistant:

Tag Assistant provides much more debugging insight to help you understand where there may be errors in the tagging scripts. However, Google Tag Assistant is tightly coupled with known trackers, so only specific implementations can be debugged.

The added value of the Tag Assistant add-on is that it can provide detailed, low-level tag information based on the metrics intended to be communicated to the third parties.

Below is a sample of what appears in the metrics report for a particular tracker:

With the add-ons reviewed above, you can get adequate coverage to validate that the tag management scripts are capturing the right information and communicating with third parties as the specification expects.
